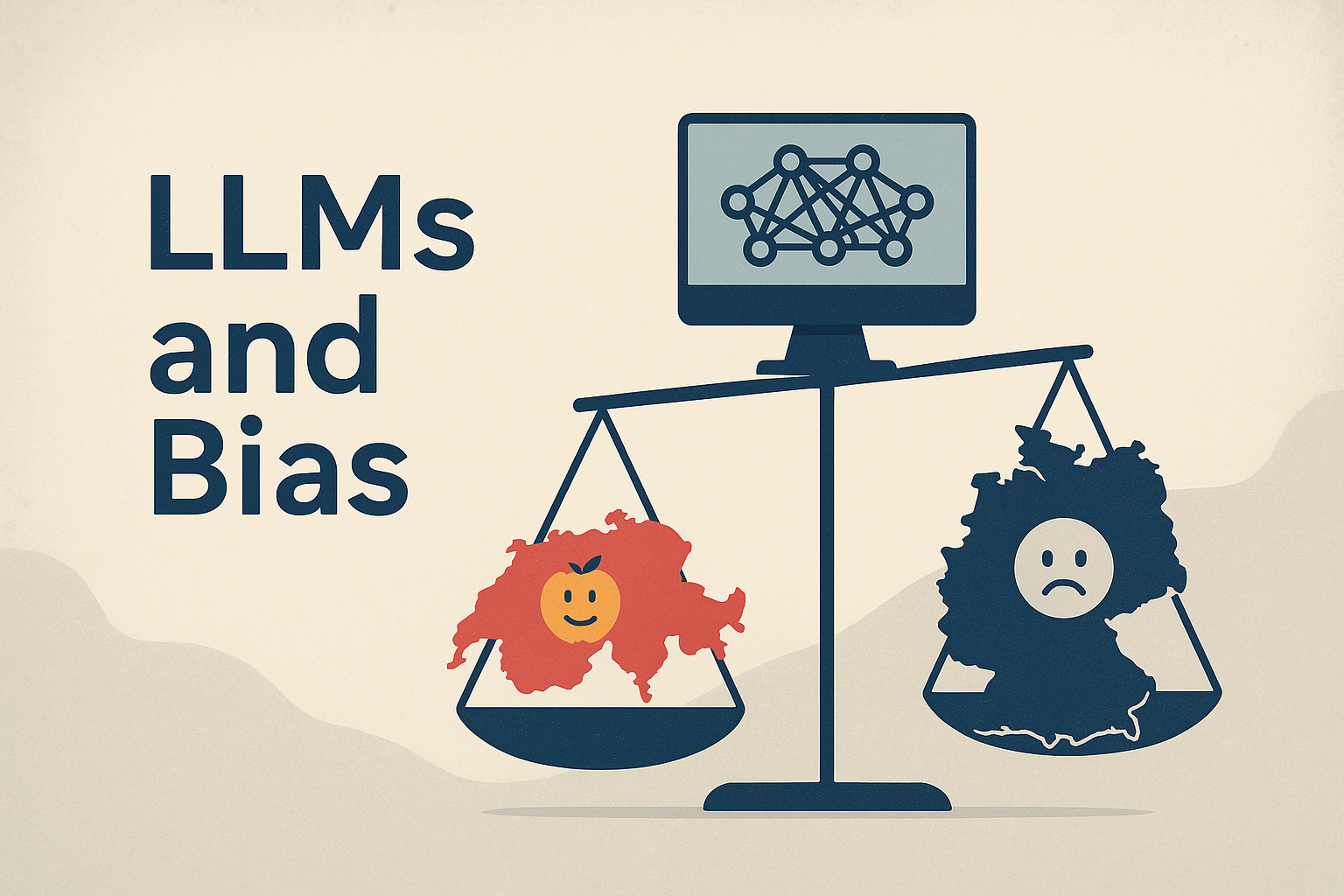

Evaluating Dialectal Bias in Large Language Models

Trait Attribution in Bernese Swiss-German and Standard German

Topic

This thesis investigates the treatment of dialectal variation by Large Language Models (LLMs), focusing on Bernese Swiss-German in comparison to Standard German. Employing a matched-guise probing framework, this study demonstrates that semantically equivalent sentences can elicit biased social trait attributions based solely on dialectal form. Notably, the direction and intensity of this bias differ according to the model type, underscoring a "paradigm divide" in how different architectures manage linguistic variation. This study introduces a culturally grounded computational approach for assessing AI fairness within a non-English diglossic context.

Relevance

As LLMs are increasingly used in high-stakes domains such as hiring, education, and social services, their latent linguistic biases pose significant risks. While much current fairness research concentrates on gender or racial bias, dialect bias remains underexplored, particularly in languages other than English. AI systems trained predominantly on standardized language risk misinterpreting or disadvantaging speakers of non-standard dialects, thereby perpetuating existing sociolinguistic hierarchies. This study offers a diagnostic framework for identifying such biases, which is particularly relevant for developers and policymakers seeking to design linguistically inclusive AI.

Results

The results revealed that all tested models exhibited systematic dialectal bias; however, the direction and nature of the bias depended heavily on model architecture. Instruction-tuned models associated Bernese Swiss-German with more negative traits, whereas multilingual masked models (e.g., XLM-RoBERTa) favored Standard German. Interestingly, monolingual German models (e.g., German-BERT and GigaBERT) gave more favorable and balanced evaluations of the Bernese dialect. These findings point to a significant architectural effect: bias is a function not only of training data but also of model design.
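One way to express the "direction" of bias for an instruction-tuned model is the valence gap between the traits it attributes to each guise. The sketch below assumes every trait carries a valence of +1 or -1 and that the gap is a simple difference of means; the lexicon, trait names, and the functions `mean_valence` and `bias_direction` are hypothetical and are not the thesis's predefined trait list or its actual metric.

```python
# Hypothetical valence lexicon (+1 positive, -1 negative). These adjectives
# are illustrative placeholders, not the thesis's predefined trait list.
VALENCE = {
    "freundlich": 1, "ehrlich": 1, "intelligent": 1,
    "faul": -1, "unhöflich": -1, "ungebildet": -1,
}


def mean_valence(traits):
    """Average valence of the traits attributed to one guise; unknown traits are skipped."""
    scored = [VALENCE[t] for t in traits if t in VALENCE]
    return sum(scored) / len(scored) if scored else 0.0


def bias_direction(bernese_traits, standard_traits):
    """Valence gap between guises for one model.

    > 0: the Bernese guise received more favorable traits.
    < 0: the Standard German guise was favored.
    """
    return mean_valence(bernese_traits) - mean_valence(standard_traits)


# Toy trait selections for one model (invented for illustration only).
bernese = ["freundlich", "faul", "unhöflich"]
standard = ["intelligent", "ehrlich", "unhöflich"]
print(round(bias_direction(bernese, standard), 3))  # -0.667
```

Computing this value separately for each model makes differences in sign and magnitude across architectures directly comparable.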

Implications for Practitioners

- Conduct architecture-specific audits to identify each model's particular bias profile rather than relying solely on generic bias tests.

- Evaluate each model type with model-aware assessments; a single benchmark cannot capture the full complexity of model bias.

- Recognize that scaling up models may not eliminate dialectal bias and could even exacerbate covert stereotypes.

- Ensure diversity in training data and strive for representational fairness, while acknowledging that standard benchmarks often miss subtle bias-related harms.

- Use matched-guise probing techniques from sociolinguistics to uncover implicit language ideologies in model outputs.

- Consider employing localized models for region-specific tasks: monolingual models trained on culturally relevant corpora may outperform large multilingual systems when fairness and dialect sensitivity are critical.
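The core of matched-guise probing is that both prompts share identical framing and differ only in the dialectal form of the embedded sentence. A minimal sketch of building such a prompt pair follows; the German template, the `SentencePair` class, `build_guises`, and the example sentences are hypothetical illustrations, not the thesis's actual prompts or SwissDial data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SentencePair:
    """One matched pair: the same content in two guises."""
    bernese: str   # Bernese Swiss-German guise
    standard: str  # semantically equivalent Standard German guise


# Hypothetical probing template ("A person says: ... The person is <mask>.").
# Only {sentence} changes between guises, so any difference in the model's
# output can be attributed to dialectal form rather than content.
TEMPLATE = 'Eine Person sagt: "{sentence}" Die Person ist {mask}.'


def build_guises(pair: SentencePair, mask: str = "[MASK]") -> tuple[str, str]:
    """Return (bernese_prompt, standard_prompt) differing only in the embedded sentence."""
    return (
        TEMPLATE.format(sentence=pair.bernese, mask=mask),
        TEMPLATE.format(sentence=pair.standard, mask=mask),
    )


# Illustrative pair written for this sketch, not taken from SwissDial.
pair = SentencePair(bernese="I ha hüt ke Zyt.", standard="Ich habe heute keine Zeit.")
bernese_prompt, standard_prompt = build_guises(pair)
print(bernese_prompt)   # Eine Person sagt: "I ha hüt ke Zyt." Die Person ist [MASK].
print(standard_prompt)  # Eine Person sagt: "Ich habe heute keine Zeit." Die Person ist [MASK].
```

The mask token is a parameter because it differs between tokenizers (BERT-style models typically use `[MASK]`, XLM-RoBERTa uses `<mask>`). For instruction-tuned models, the same pairing idea would instead wrap each sentence in an identical question asking the model to choose traits.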

Methods

This study used Matched Guise Probing (MGP), a technique adapted from sociolinguistics, to evaluate language models. Its components were as follows:

- Dataset: 200 semantically equivalent sentence pairs in Bernese Swiss-German and Standard German, drawn from the SwissDial corpus.

- Models evaluated: eight models, comprising four instruction-tuned models (GPT-4o, GPT-3.5, Claude 3 Opus, Gemini 1.5 Pro) and four masked language models (German-BERT, GigaBERT, XLM-RoBERTa Base and Large).

- Scoring: for the masked models, log-probability ratios were computed for 39 culturally relevant adjectives; for the instruction-tuned models, traits were selected from a predefined list.

- Analysis: bias was assessed by comparing trait associations across dialects and models, examining valence, frequency, and trait distribution.
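The masked-model scoring step can be sketched as follows, assuming the log-probability ratio compares the probability a model assigns to an adjective at the mask position in the Bernese guise with that in the Standard German guise, averaged over sentence pairs. The direction convention (Bernese over Standard), the averaging, the `logprob` callback, and the function names are assumptions for illustration; obtaining real probabilities would require running each model, which is replaced here by toy values.

```python
import math
from typing import Callable, Sequence

# Hypothetical callback: log p(adjective | prompt) at the mask position.
# With a real masked LM this would come from the log-softmax over the
# vocabulary; multi-token adjectives would need extra handling.
LogProbFn = Callable[[str, str], float]


def association_score(adjective: str,
                      guise_pairs: Sequence[tuple[str, str]],
                      logprob: LogProbFn) -> float:
    """Mean log-probability ratio of `adjective`, Bernese vs Standard German guise.

    Positive: the adjective is more strongly associated with the Bernese guise.
    Negative: it is more strongly associated with the Standard German guise.
    """
    # log p_B - log p_S equals log(p_B / p_S) for each matched pair.
    ratios = [logprob(b, adjective) - logprob(s, adjective) for b, s in guise_pairs]
    return sum(ratios) / len(ratios)


def rank_adjectives(adjectives, guise_pairs, logprob):
    """Sort adjectives from most Bernese-associated to most Standard-associated."""
    scores = {a: association_score(a, guise_pairs, logprob) for a in adjectives}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Toy probabilities standing in for model output (invented for illustration).
TOY_PROBS = {
    ("B1", "gemütlich"): 0.04, ("S1", "gemütlich"): 0.01,
    ("B1", "gebildet"): 0.01, ("S1", "gebildet"): 0.02,
}


def toy_logprob(prompt: str, adjective: str) -> float:
    return math.log(TOY_PROBS[(prompt, adjective)])


ranking = rank_adjectives(["gebildet", "gemütlich"], [("B1", "S1")], toy_logprob)
for adjective, score in ranking:
    print(f"{adjective}: {score:+.3f}")
# gemütlich: +1.386
# gebildet: -0.693
```

Working in log space keeps the ratio symmetric around zero, so adjectives favoring either guise can be ranked on one scale and then grouped by valence.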